Search engine optimization (SEO) is the process of optimizing a blog or website for search engines. If you are a blog or website owner, a content creator who wants to reach people, or an expert in a particular field who wants to share knowledge with the world, SEO can help you. First of all, you need a blog or website.

People can then find, read, and understand your content, and give feedback on it. The work of making that content easy for search engines and people to find is called SEO, which is short for Search Engine Optimization.

SEO is done to improve the ranking of a blog or website. This basic SEO guide gives beginners the information they need to start their search engine optimization journey.

If you have already mastered SEO, there is nothing new for you here. This guide is for beginners who are just starting out with search engine optimization. Next, we will go through SEO in detail so that you have a complete picture of it.

Keep in mind that this is a very large field. None of the information here is final, because SEO keeps changing and you need to keep learning. So let's talk about what search engine optimization is, how it works, and how it applies to your blog or website.

What is SEO

Search engine optimization is a continuous effort to rank highly for relevant search terms and phrases by optimizing website content for search engine result pages.

SEO is a golden opportunity to attract new and returning users to a website. It is an important part of digital marketing, which has become a major way of reaching people all over the world and bringing them to a site as traffic.

As the name suggests, SEO is optimization for search engines. We optimize our website for Google, Bing, Yahoo, and other search engines so that they can get complete information about it. Google is the most used search engine, so in this guide we look at SEO from Google's point of view.

SEO means that search engines can easily access your website and can read and understand your content. Nothing should obstruct them.

The better search engines understand your content, the more accurately they can display it on the results page. There are no shortcut tips here that will put your website first on the search engine result page.

Instead, we will try to improve our site by adopting best practices. This is work we do on our own website, and it requires patience. There is no fixed time after which it starts to work, so we need to make continuous efforts. To make a website SEO friendly, you may have to make small changes to your site.

We make these changes with the search engines' guidelines in mind. These improvements make your website search engine friendly, which is very important and can move your pages up in the search results.

Along with this, you should also pay attention to the design of your website. Users want a clean, fast-loading, and well-organized site. This matters a lot for the user experience and for your site's organic results.

Your website needs constant work to provide a better experience for both search engines and users. Read on to learn how a website reaches the user.

Why SEO is important

Every content creator wants to reach as many people as possible, which is a complex task. Google is the most used search engine, and billions of searches are made on it every day. That is why SEO becomes necessary.

Whenever a user searches for something related to our content, we want to deliver our content to that user. For this, we want to rank near the top of the search results, and to get there we have to do SEO on our content.

If our content is relevant to the user's search, it can appear in the search results. Through search engine optimization, you can reach more and more people. The better we rank by optimizing our site and content, the more users will reach our website.

Search engine optimization is needed to bring more users to your website. It plays an important role in ranking our website better and getting more organic traffic.

SEO is done on the website itself. It is the practice of optimizing a website for search engines, and it is the best way to bring more users to your content from search without paying for ads.

If you are planning a new website or making important changes to an existing one, you should adopt search engine optimization practices to improve your position on the result pages and increase organic traffic. Keep up your SEO work until you reach your end goal, since that is what gets better results.

How search engines work

We need to understand, in general terms, how search engines work. Search engines are always looking for new and relevant information. We should follow their basic principles when we plan our strategy.

Search engines like Google, Yahoo, and Bing each work in their own way, but the purpose of every search engine is to lead a search to the right result. Google is the most used search engine. A search engine first finds and crawls a web page, then indexes it, and finally displays it in the search results.

Your priority as a content creator should be to help search engines crawl and index all of your web pages. Nothing on your site should prevent a search engine from crawling and indexing a page. This work is known as technical SEO.

- Crawler - Each search engine has its own robots, which continuously search for, read, and index new and updated pages on all types of websites. A crawler is a piece of software. Every search engine gives its crawler a name. Google's crawler, for example, is called Googlebot.

- Crawl - Crawling means that the search engine visits new pages, as well as old pages on which some material has been added or removed. The crawler follows links: after reading one page, it moves on to the pages linked from it.

- Index - Indexing is the process of storing what the crawler has read so that it can be shown in search results. Search engines are constantly looking for new content that they do not yet have, so make sure that your content is fresh and informative.
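To make the idea of following links concrete, here is a minimal sketch of a crawler walking a tiny, made-up site. The `SITE_LINKS` table and the `crawl` function are hypothetical stand-ins for illustration. A real crawler such as Googlebot is far more elaborate and fetches pages over the network.

```python
from collections import deque

# Hypothetical in-memory "website": each page maps to the pages it links to.
SITE_LINKS = {
    "/": ["/about", "/blog"],
    "/about": ["/"],
    "/blog": ["/blog/seo-basics", "/about"],
    "/blog/seo-basics": [],
    "/orphan": [],  # no page links here, so a link-following crawler never finds it
}


def crawl(start, links):
    """Follow links breadth-first from `start` and return pages in discovery order."""
    discovered = [start]
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                discovered.append(target)
                queue.append(target)
    return discovered


index = crawl("/", SITE_LINKS)
print(index)  # ['/', '/about', '/blog', '/blog/seo-basics']
```

The page `/orphan` never shows up because nothing links to it, which is why a site with no incoming links can take a long time to be found.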

Search engines read the content of a page before indexing it. When Google learns about a new piece of content or a new website, it reads and indexes it. This is called the indexing process.

How to show your site in search results

The first goal after creating a website is to appear in Google's search results, which requires the site to be indexed. First of all, check whether your site is indexed. If it is not, there is no shortcut.

Google indexes a lot of pages every day, but it may not have found your site yet. Be patient. This can take time, especially if your site is not linked from any other website. Once your site is found, it will be indexed.

- Check if your site is indexed - Search Google for your domain with the site: operator:

site:yoursitename.com

- Website indexing - To speed up indexing, add your website to Google Search Console and submit your sitemap. This sends information about your website to Google, though when the site gets indexed still depends on Google. If you don't know what a sitemap is, we cover it further below.

- Sitemap - Adding a sitemap is an easy and reliable way to make your site visible in search. Naturally, it will take time.

- Adding content - Keep adding content to your website regularly. The content must be new and useful to the user. Fix your website's speed, and make sure the sitemap is submitted properly.

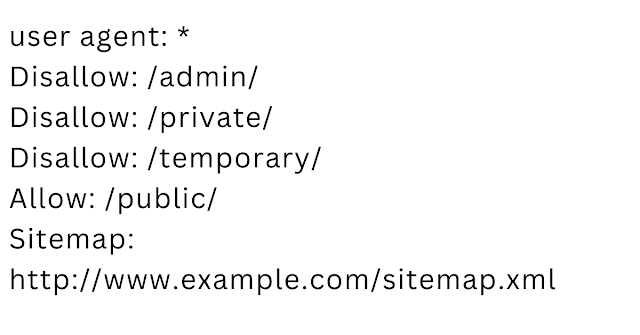

- Using robots.txt - robots.txt is a file that tells search engines which pages and parts of a website they may crawl. It is placed in the root directory of the site, and its name must be exactly robots.txt.

Through this file, crawlers are told which pages of the website to crawl, and robots.txt is used to prevent crawling of sensitive or unwanted pages. If your website has pages that you don't want crawled, or pages that are not useful to the user, you can block them with robots.txt.

This file only gives a hint to the crawler. It does not enforce anything, so a page may still be crawled.
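As a rough illustration of how robots.txt rules are read, the sketch below uses Python's standard `urllib.robotparser` module to check a few URLs against a small rule set. The rules, paths, and the `example.com` domain are made up for this example, and `is_allowed` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the blocked paths are examples only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /drafts/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(url, user_agent="Googlebot"):
    """Return True if the rules above let `user_agent` crawl `url`."""
    return parser.can_fetch(user_agent, url)


print(is_allowed("https://example.com/blog/seo-basics"))  # True
print(is_allowed("https://example.com/admin/settings"))   # False
```

Running a check like this before publishing a new robots.txt is one way to make sure you have not blocked pages that you actually want to appear in search.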

Explaining content to search engines and users

When you tailor your content to users, make sure search engines can access it too. All files, including CSS and JavaScript, must be accessible so that search engines can understand and index your page in context. Any obstruction may keep them from understanding the contents of the website and can have a big impact on its ranking.

Make your title unique - Users search to find information on their subject. If your title is not accurate and relevant, they will skip it and go to another website. Your page title is the title shown to the user on the search engine results page. Pages on the site should not share the same title.

Using tags - Tags are pieces of HTML through which a page tells the search engine what it is about and what information it contains. The main information about the page is expressed in HTML, which the search engine understands. The title tag is written near the top of your HTML.

Advantages of tags

The title tag holds the title of the page, which makes it important: search engines use it to understand the content of the page and show it in Google search results.

This title is displayed to the user as part of the snippet, and it is what leads the user to visit the website. If your title doesn't match the content of the page, Google may display other text based on the user's query. This can be any part of your page's content.

- Title use - Every page you write should have its own separate page title, so that the page content is presented in an organized manner.

This helps the user understand the context of the page. Try to keep the title short and precise, but that does not mean giving incomplete information in it.

<title>your main title here</title>

- Use description tag - The description tag is similar to the title tag, with some differences. It is longer than the title tag and has more words. Its maximum length is about 150 characters. It applies to the website and to each page.

<meta content='your description here, up to about 150 characters' name='description'/>

The description tag should be different for every page. Its purpose is to give the user and the search engine a summary of the page in about 150 characters, so that they get correct and accurate information about it. A good description can make a big contribution to the number of users who click through from the results page.
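The title and description of a page can be checked with a short script. The sketch below uses Python's standard `html.parser` module. The sample page, the class and function names, and the limits of 65 and 150 characters are assumptions used as rules of thumb, not official cut-offs.

```python
from html.parser import HTMLParser

TITLE_LIMIT = 65         # assumed rule of thumb, in characters
DESCRIPTION_LIMIT = 150  # assumed rule of thumb, in characters


class HeadTagParser(HTMLParser):
    """Collect the <title> text and the meta description of an HTML page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def read_head_tags(html):
    """Return (title, description) found in the given HTML text."""
    parser = HeadTagParser()
    parser.feed(html)
    return parser.title.strip(), parser.description.strip()


def within_limit(text, limit):
    """True when the text is present and no longer than `limit` characters."""
    return 0 < len(text) <= limit


# Hypothetical page used only for this example.
PAGE = """<html><head>
<title>SEO Basics: A Starter Guide for Beginners</title>
<meta name="description" content="Learn how search engines crawl, index and rank pages, and how to optimize a new blog.">
</head><body></body></html>"""

title, description = read_head_tags(PAGE)
print(title, within_limit(title, TITLE_LIMIT))
print(description, within_limit(description, DESCRIPTION_LIMIT))
```

A check like this can be run over every page of a site to spot missing or overly long titles and descriptions.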

- Use of structured data - We have been talking about search engines and content since the beginning. Structured data, also called schema markup, is the link between the two.

Structured data is code that helps search engines better understand the content on a page and identify its important parts. In turn, the search engine can use that structured data to present the page on the result pages in a way that attracts the user's attention. We call this a rich result. There are several types of structured data, including Article, WebSite, and Organization structured data. We will cover structured data in detail in another post.
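As a small illustration, Article structured data is commonly written as JSON-LD inside a script tag. The sketch below builds such a snippet with Python's `json` module. The function name `article_json_ld` and all the values passed to it are made up for this example, and only a few common schema.org properties are shown.

```python
import json


def article_json_ld(headline, author_name, date_published, url):
    """Return a JSON-LD <script> block describing an article (schema.org Article)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical values for illustration only.
snippet = article_json_ld(
    headline="SEO Basics for Beginners",
    author_name="Example Author",
    date_published="2024-01-15",
    url="https://example.com/blog/seo-basics",
)
print(snippet)
```

The resulting block would be placed in the HTML of the page it describes.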

- Writing content - If you are writing a blog, content is what matters most. Content is the part of the website that users come for, and only the content of your website can earn you a higher position.

Good content also attracts other users. Simply put, content is the backbone of any blog. Making your site interesting and user friendly should also be a priority.

Users come to your website in search of new, accurate, and interesting information. They decide how useful the website is after reading its content.

- Use links - A website consists of many pages covering different subjects. In the post you are writing, you can add a link to another related page.

These links take the user to other pages on your website. Make sure that the text carrying the link is related to the linked page, so that Google can easily understand what the link is about.

- Image optimization - Your blog posts should contain images. Choose images that help users understand your content. Placing a relevant image in the blog post is good practice from an SEO point of view.

This is one of the basic methods of SEO. Large, heavy images can increase load times, so any image you use should be optimized.

We can also tell search engines what an image is about so that they can understand it easily. Here, optimization means writing alt text. Most search engines and browsers support the JPEG, GIF, PNG, and BMP formats.
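Missing alt text is easy to detect automatically. The following sketch, again using the standard `html.parser` module, lists the images on a page that have no alt text. The sample HTML and the names `ImageAltChecker` and `images_missing_alt` are hypothetical. Treating an empty alt attribute as missing is a simplifying assumption, since purely decorative images may leave it empty on purpose.

```python
from html.parser import HTMLParser


class ImageAltChecker(HTMLParser):
    """Record the src of every <img> tag without a non-empty alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attrs = dict(attrs)
            if not (attrs.get("alt") or "").strip():
                self.missing_alt.append(attrs.get("src") or "")


def images_missing_alt(html):
    """Return the src values of images that lack alt text."""
    checker = ImageAltChecker()
    checker.feed(html)
    return checker.missing_alt


# Hypothetical post body for illustration.
POST_HTML = """
<img src="/img/seo-diagram.png" alt="Diagram of crawling and indexing">
<img src="/img/chart.png">
<img src="/img/spacer.gif" alt="">
"""

print(images_missing_alt(POST_HTML))  # ['/img/chart.png', '/img/spacer.gif']
```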

- Mobile friendly website - Mobile networks now cover most of the world, and a phone is one of people's vital needs. Almost everyone has a mobile phone in hand and uses it to search Google for information.

Therefore, a mobile friendly site is necessary to increase the presence of your blog or website on the internet. It provides a better user experience. Make sure that people using phones and tablets can see all elements, such as navigation, clearly and in order.

Since late 2016, Google has included guidelines for making and ranking a website mobile-friendly.

Technical SEO checklist and Techniques

Technical search engine optimization is the most important process in SEO and its backbone. The very first step is to optimize the website itself. Technical SEO covers website design and the tasks that search engines require.

It helps search engines understand all the main information about the website. Search engine optimization is not possible without technical SEO, so we keep it in mind while building a website and give it attention before any other activity.

All other efforts become simpler when technical SEO is done properly. It is done entirely on the website, mainly for the benefit of search engines.

- Technical SEO helps search engines understand the website.

- It can greatly affect the performance of any website.

- If the website's pages are not search engine friendly, they will not appear in the search engine results, no matter how much you try.

- Without it, users of your website are hindered in their use.

- Traffic can drop sharply, greatly affecting the performance of your website.

- Users do not want to wait for a slow-loading website.

- The number of users opening the site on mobile can decline sharply.

- Search engines have trouble understanding the site.

Crawling - Technical SEO affects how the website is crawled, which makes crawling the first step of technical SEO. Done properly, it improves crawling, indexing, and click-through rate (CTR), and brings in new users.

Mobile friendly website - This is done at the time the website is created. While building the website, we make it mobile friendly so that mobile users get a better experience.

Website architecture - A systematic site structure, in which all pages are linked to each other, helps search engines and users understand the content on the website. As a first step, all pages should be reachable through links from the main page, and the structure should not be confusing in any way.

So far we have covered general information about search engine optimization, following the SEO Starter Guide: what the fundamentals of SEO are, how SEO works, and what it involves. This gives beginners a brief overview. Do tell us your opinion on it.

Keyword research - Keywords also have a role in SEO. Keywords are words related to the user's query, and they are what make our website show up in the search results. We should have distinct keywords for our website.

They tell the search engine which queries we want to be found for. When doing keyword research, look for keywords that also have a short form (acronym). These are considered favorable for the website.

Keywords are chosen not only for the website but also for each post. Prepare a list of relevant keywords and use them in the post so that search engines can understand it better. Keyword research has been in use for a long time, and it matters to your content.

Search engine friendly permalinks - This is part of technical SEO. A permalink is also called a URL. Both mean the address of a website or post, which helps people and search engines understand what the page is.

This makes permalinks important for crawlers. A permalink by itself does not have any direct effect on the ranking of the website.

When creating permalinks, try to keep them short and descriptive. Cluttered permalinks can be difficult to understand. Always use short permalinks that match the content of your page.
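One common way to get short, descriptive permalinks is to turn the post title into a "slug". The sketch below is one possible approach. The function name `slugify` and the six-word limit are assumptions for illustration, not a rule from any search engine.

```python
import re
import unicodedata


def slugify(title, max_words=6):
    """Turn a post title into a short, lowercase, hyphen-separated permalink slug."""
    # Strip accents so that, for example, "é" becomes "e".
    ascii_text = (
        unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    )
    # Keep only runs of letters and digits, dropping punctuation.
    words = re.findall(r"[a-z0-9]+", ascii_text.lower())
    return "-".join(words[:max_words])


print(slugify("What Is SEO? A Basic Guide for Beginners!"))
# what-is-seo-a-basic-guide
```

The slug would then be appended to the site address, for example `https://example.com/what-is-seo-a-basic-guide` (a made-up URL).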

Breadcrumb list - Navigating a website can be a problem. Without breadcrumbs, the structure of the website may be unclear.

Breadcrumbs help organize the order of pages on a website, and they show where the current page sits in that order.

This is important from the user's point of view and helps provide a better website experience. Users can navigate by going through the pages in the breadcrumb list one by one.

Page speed - Simply put, pages that open fast matter for SEO. Users tend to leave web pages that open slowly, which can cause your ranking to drop and lose you traffic. Before launching the website, make sure that pages load fast on desktop and mobile.

This is an important ranking factor. As a rule of thumb, a page is considered to have good speed when it scores over 90 on the desktop version and over 80 on the mobile version.

You can use Google's PageSpeed Insights tool to measure website speed and find improvements.

Mobile friendly - Google has switched to mobile-first indexing. Web pages that do not work well on mobile lose relevance. Make sure all pages of the website are optimized for mobile. Use Google's Mobile-Friendly Test to check whether a page is mobile optimized.

Sitemap - Your website should have a sitemap. It is a list of links to your web pages that lets the crawler crawl and index all the pages effectively.
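A sitemap is usually an XML file in the format described at sitemaps.org. As a minimal sketch, the code below builds one with Python's standard `xml.etree.ElementTree` module. The page list, the dates, and the function name `build_sitemap` are made up, and many sites generate this file with a plugin or their CMS instead.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages for illustration.
PAGES = [
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/seo-basics", "2024-02-01"),
]

sitemap_xml = build_sitemap(PAGES)
print(sitemap_xml)
```

The output would typically be saved as `sitemap.xml` in the root of the site and submitted in Google Search Console.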

Robots.txt - This is a file placed in the root folder of the website. It signals to crawlers how to treat our website, and helps keep them away from pages that we don't want crawled. Make sure you haven't accidentally blocked pages meant for users, because that would keep them from gaining search rankings and hurt your SEO.

Canonical tag - When, for some reason, a web page is reachable under more than one URL, search engines may have trouble deciding which URL is the important one to index. The canonical tag is used to point to the preferred page.
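A typical case is the same page being reachable with extra tracking parameters in the URL. The sketch below builds a canonical link tag by dropping the query string and fragment. It assumes that these parts never change the page content on your site, which is not true for every website. The name `canonical_link_tag` and the sample URL are hypothetical.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_link_tag(url):
    """Drop the query string and fragment, then build the canonical <link> tag."""
    parts = urlsplit(url)
    clean_url = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    return f'<link rel="canonical" href="{escape(clean_url, quote=True)}">'


print(canonical_link_tag("https://example.com/blog/seo-basics?utm_source=news#top"))
# <link rel="canonical" href="https://example.com/blog/seo-basics">
```

The tag goes into the head section of every variant of the page, so that all variants point to the same preferred URL.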

SSL certificate - An SSL certificate indicates that the connection to a website is secure. The website should be served with an SSL certificate. It can help the ranking and gives users a sense of security. A site with a certificate is served over 'HTTPS' in place of 'HTTP'.

Structured data - Search engines understand structured data, also called schema markup, very well. Schema markup is code that helps search engines better understand the important parts of the content.

Schema markup can make your content appear better and differently on search engine result pages. Remember that it is not a direct ranking factor. It is just a means of presenting the content in a better way.

Broken links - It's natural to have links on your website, but broken links can affect the SEO of the website. Check your links from time to time and remove or fix any broken ones.

404 page - A website consists of many pages with different types of content. After some time, a post may be removed or deleted from the website.

The page no longer exists, but its URL is still present on the internet. When a user or crawler requests that URL and the page is not found, the website responds with a 404 error.

This may confuse the user. The website should not link to such pages. If a page has been removed, you can redirect its URL to another URL.
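Checking links by hand gets tedious, so here is a minimal sketch of a broken-link check. `find_broken_links` takes any function that returns an HTTP status code for a URL. `http_status` shows one way to get that code with the standard `urllib` module, while the example run uses a made-up status table instead of real requests. All names and URLs here are hypothetical.

```python
import urllib.error
import urllib.request


def http_status(url, timeout=10):
    """Return the HTTP status code for `url` using a HEAD request.

    Network failures (for example an unreachable host) raise URLError and are
    not handled in this sketch.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code


def find_broken_links(urls, get_status=http_status):
    """Return (url, status) pairs for links whose status code is 400 or higher."""
    broken = []
    for url in urls:
        status = get_status(url)
        if status >= 400:
            broken.append((url, status))
    return broken


# Hypothetical status table standing in for real HTTP requests.
FAKE_STATUSES = {
    "https://example.com/": 200,
    "https://example.com/old-post": 404,
    "https://example.com/moved": 301,
    "https://example.com/error": 500,
}

broken = find_broken_links(FAKE_STATUSES, get_status=FAKE_STATUSES.get)
print(broken)
# [('https://example.com/old-post', 404), ('https://example.com/error', 500)]
```

Links reported with a 404 status are the candidates for removal or for a redirect to another URL.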

Keyword density - If you are starting a blog, it is very important to know about keyword density. There has been a lot of talk about keyword density in blogging.

Each article is written around one main keyword, your focus keyword. Keyword density refers to how often that keyword is used in the text, usually expressed as a percentage of the total number of words.
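Keyword density is simple to compute. The sketch below counts how often a keyword (one or more words) occurs and divides by the total word count. This is one common definition and an assumption on our part, since tools differ in the details. The function name and sample text are made up.

```python
import re


def keyword_density(text, keyword):
    """Return occurrences of `keyword` per 100 words of `text`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    key = keyword.lower().split()
    if not words or not key:
        return 0.0
    size = len(key)
    # Count every position where the keyword's words appear in sequence.
    hits = sum(
        1 for i in range(len(words) - size + 1) if words[i:i + size] == key
    )
    return 100 * hits / len(words)


SAMPLE = "SEO helps a blog rank and SEO takes time too"
print(keyword_density(SAMPLE, "seo"))  # 20.0
```

Here the keyword appears 2 times in 10 words, which gives a density of 20 percent. Real articles are much longer and their density is far lower.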

Include your main keyword

Keywords are the words your web page is about. Your focus keywords should appear in the important parts of the webpage, in a way that is both user friendly and search engine friendly. These are the main places on a webpage where keywords are used.

- Title - It is typically between 20 and 65 characters long and summarizes the whole page.

- H1 tag - This is the first and most important heading of the page, and it describes in one complete sentence what the page is about.

- Description - Each page has its own description of up to about 150 characters, in which the focus keyword is used.

- URL - Use the focus keyword for the webpage in its URL.
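The four places listed above can be checked in one go. The sketch below is a simple substring check, and it assumes that words in the URL are separated by hyphens. The function name `keyword_placement` and the sample values are hypothetical.

```python
def keyword_placement(keyword, title, h1, description, url):
    """Report whether the focus keyword appears in each important place."""
    key = keyword.lower()
    url_key = key.replace(" ", "-")  # assumes hyphen-separated words in URLs
    return {
        "title": key in title.lower(),
        "h1": key in h1.lower(),
        "description": key in description.lower(),
        "url": url_key in url.lower(),
    }


# Hypothetical page data for illustration.
report = keyword_placement(
    keyword="seo basics",
    title="SEO Basics: A Starter Guide for Beginners",
    h1="SEO basics every new blogger should know",
    description="A short guide to crawling, indexing and ranking.",
    url="https://example.com/blog/seo-basics",
)
print(report)
# {'title': True, 'h1': True, 'description': False, 'url': True}
```

In this example the description is the only place where the focus keyword is missing.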

The main aspects of SEO are as follows

Voice search - Voice search has become easier and more common. With smartphones everywhere, and with smart speakers and voice assistants, it is becoming more popular. This means there is a need for content that answers long, conversational queries.

User experience - How useful are the website and its content to visiting users? It is important to find out. The first priority of the website owner should be to give users complete and useful information. To improve the user experience, the website should be optimized for easy navigation and fast loading times on all devices.

E-A-T - Google places special emphasis on some important factors when ranking a website, and E-A-T is a main one.

E-A-T stands for expertise, authoritativeness, and trustworthiness, and it applies to the website and its owner. Google is expected to continue to use it in ranking.

Websites that are optimized for E-A-T have a better chance of ranking well.

Link building - Link building is an off-site effort and an important aspect of SEO. Earning high quality backlinks to your website can be a strong SEO factor.

Link building should be done in a natural way, with links from reputable websites. Note: buying links, exchanging links, and any other questionable deals should be avoided.

Local SEO - This is one aspect of SEO, and it has become more important with increasing mobile usage. For local queries, Google's Local Pack shows the top three local results first on the SERP.

Each result includes the business name, address, and phone number. Create a Google My Business profile to appear in local business searches.

By following this beginners' SEO starter guide, we can expect our website to be search engine ready and to provide a positive user experience. On the SEO journey, keep up regular SEO activity and make changes as required for better website performance.
